Run scalable, reliable AI/ML inference on Kubernetes with MLflow, KServe, and AutoML, and explore deployment, orchestration, and performance at scale.

As the industry pushes toward more intelligent and integrated operations, platform engineering and internal developer platforms are helping teams rein in Kubernetes tool sprawl, while AI is proving increasingly useful for optimizing cluster management, observability, and release pipelines.

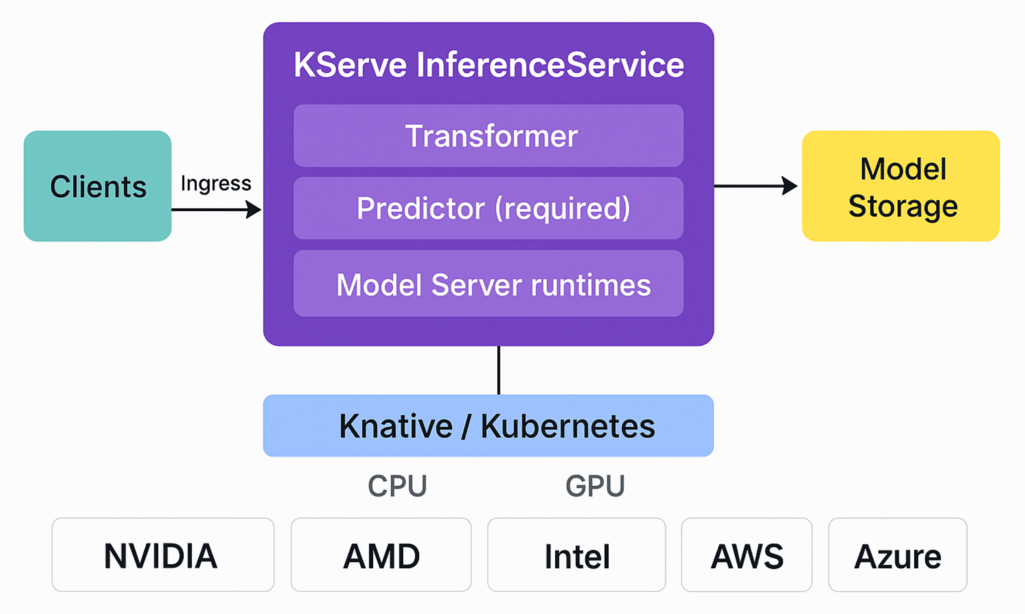

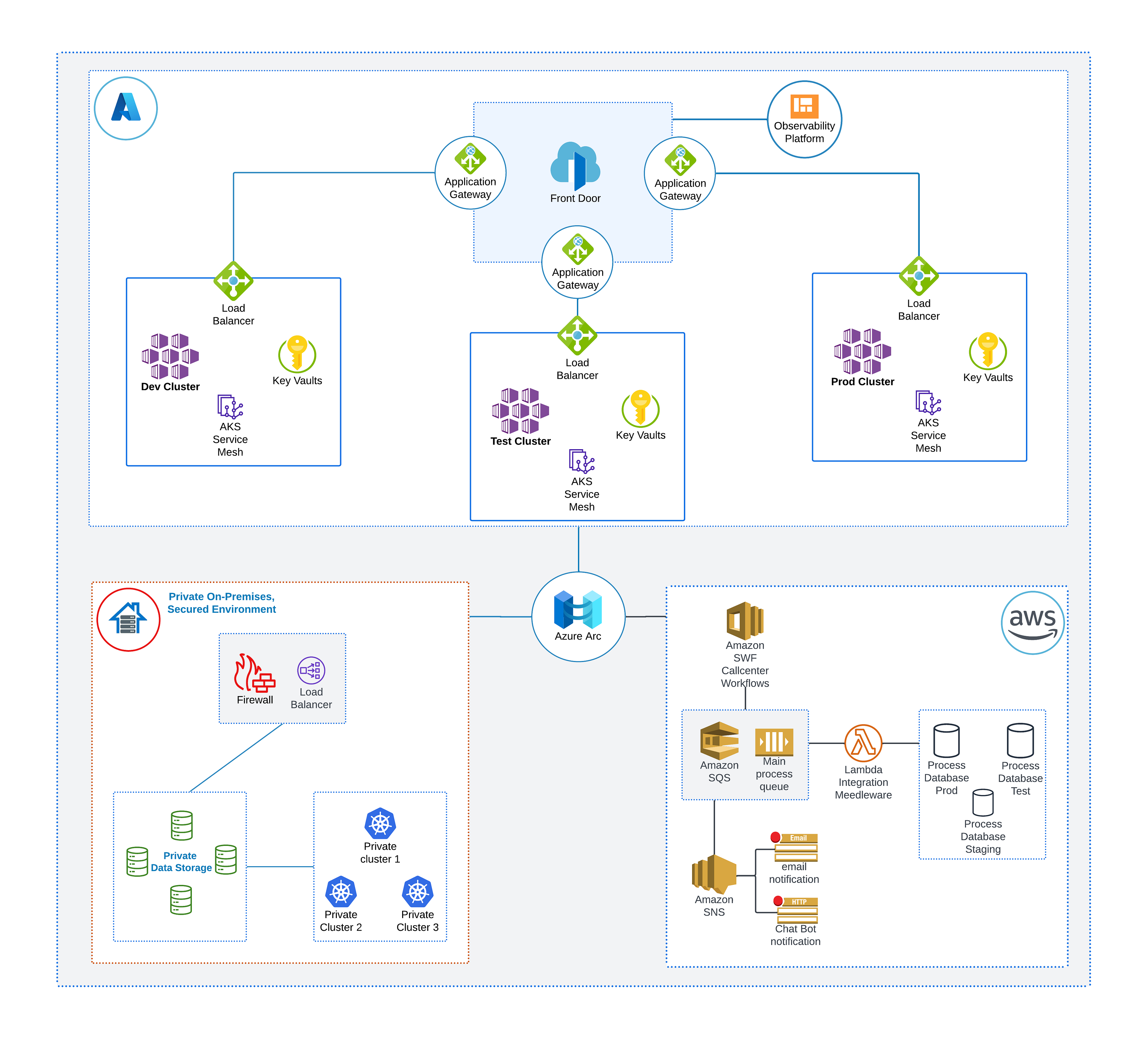

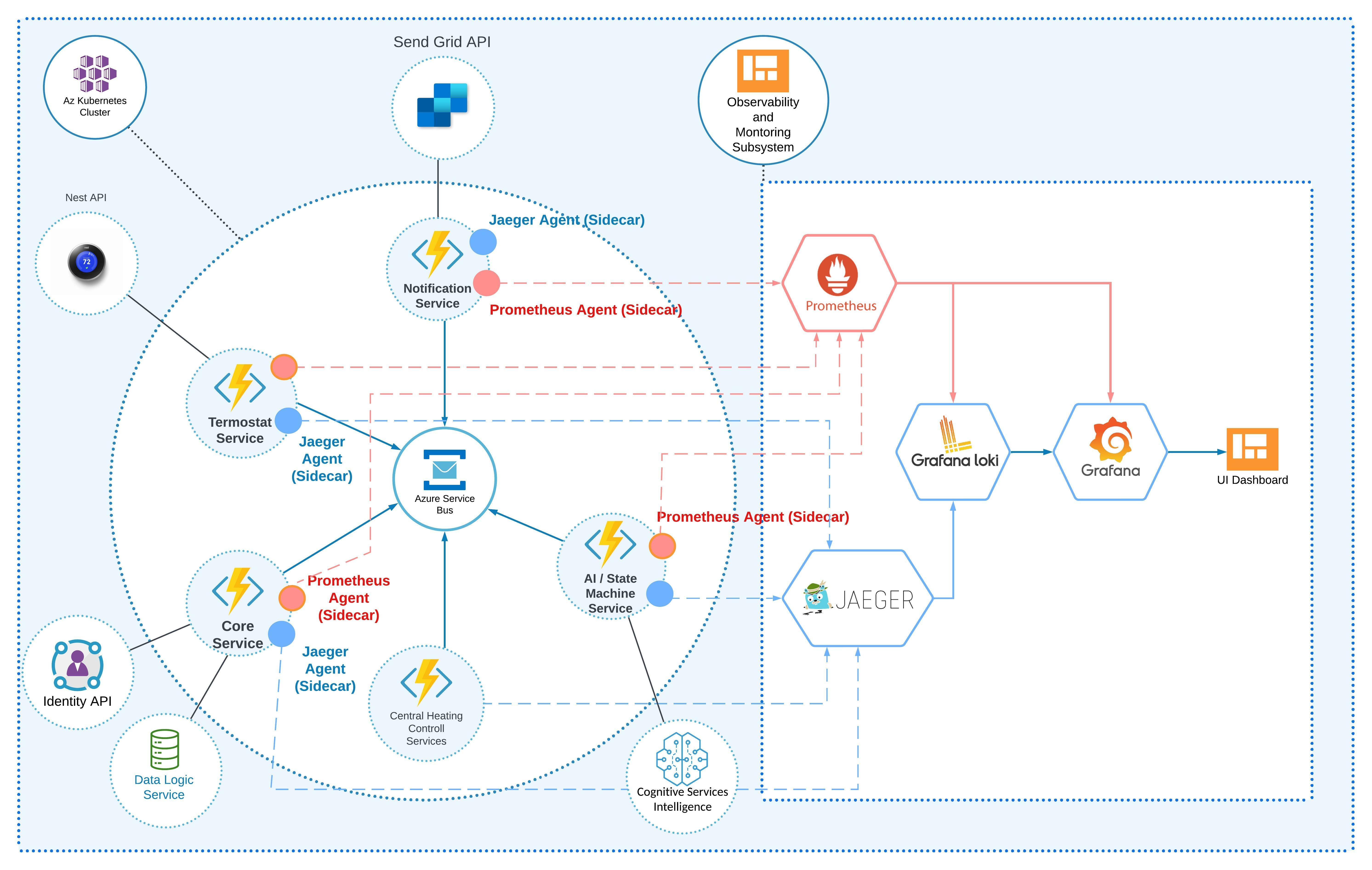

Running artificial intelligence and machine learning (AI/ML) on Kubernetes gives us a scalable, portable platform for training and serving models. Kubernetes schedules GPUs and other resources so that we can pack workloads efficiently and autoscale to match traffic for both batch jobs and real-time inference. It also coordinates multi-component stacks (model servers, preprocessors, vector databases, and feature stores) so that complex pipelines and low-latency endpoints run reliably.
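To make "autoscale to match traffic" concrete, here is a minimal sketch of the replica calculation documented for the Kubernetes Horizontal Pod Autoscaler: `desiredReplicas = ceil(currentReplicas * currentMetric / targetMetric)`. The function name, the default bounds, and the sample numbers are assumptions chosen for illustration. The real controller also handles details such as pod readiness and stabilization windows, which this sketch ignores.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10, tolerance=0.1):
    """Approximate the HPA scaling rule for a single metric.

    All names and defaults here are illustrative assumptions; only the
    core formula and the 10% default tolerance follow the Kubernetes docs.
    """
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")

    ratio = current_metric / target_metric

    # The autoscaler skips scaling when the ratio is close enough to 1.0.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas

    desired = math.ceil(current_replicas * ratio)

    # Clamp to the configured replica bounds.
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    # Hypothetical case: 4 model-server pods averaging 90% utilization
    # against a 60% target.
    print(desired_replicas(4, 90, 60))
```

With these hypothetical inputs, the ratio is 1.5, so the sketch asks for 6 replicas. A spike to ten times the target would be capped at `max_replicas` rather than scaling without bound.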

Containerization enforces reproducible environments and makes CI/CD for models practical. Kubernetes-native rolling updates, together with traffic splitting and metrics/tracing from ecosystem tools such as service meshes and model servers, help us run safe production rollouts and meet SLOs for real-time endpoints. For teams that want less operational overhead, managed endpoints exist, but Kubernetes is the go-to option when control, portability, advanced orchestration, and real-time serving matter.
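As one possible illustration of traffic splitting during a rollout, the sketch below builds a KServe `InferenceService` manifest that sends a fraction of requests to a new model revision through the `canaryTrafficPercent` field. The service name, storage URI, and model format are hypothetical placeholders, not values from this article, and the helper function is only a convenience for generating the manifest as JSON.

```python
import json


def inference_service(name, storage_uri, model_format="sklearn",
                      canary_percent=None):
    """Build a KServe v1beta1 InferenceService manifest as a dict.

    `name`, `storage_uri`, and `model_format` are illustrative assumptions.
    """
    predictor = {
        "model": {
            "modelFormat": {"name": model_format},
            "storageUri": storage_uri,
        }
    }

    if canary_percent is not None:
        if not 0 <= canary_percent <= 100:
            raise ValueError("canary_percent must be between 0 and 100")
        # Share of traffic routed to the newest revision; the rest stays
        # on the previously rolled-out revision.
        predictor["canaryTrafficPercent"] = canary_percent

    return {
        "apiVersion": "serving.kserve.io/v1beta1",
        "kind": "InferenceService",
        "metadata": {"name": name},
        "spec": {"predictor": predictor},
    }


if __name__ == "__main__":
    # Hypothetical rollout: expose a new model version to 10% of traffic.
    manifest = inference_service(
        name="churn-model",
        storage_uri="gs://example-bucket/models/churn/v2",
        canary_percent=10,
    )
    print(json.dumps(manifest, indent=2))
```

Because `kubectl apply -f` accepts JSON as well as YAML, the printed manifest could be applied to a cluster that has KServe installed. Raising the percentage in steps, while watching latency and error metrics, is one way to keep a rollout within its SLOs.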

This article is part of the Trend Report: Kubernetes in the Enterprise.

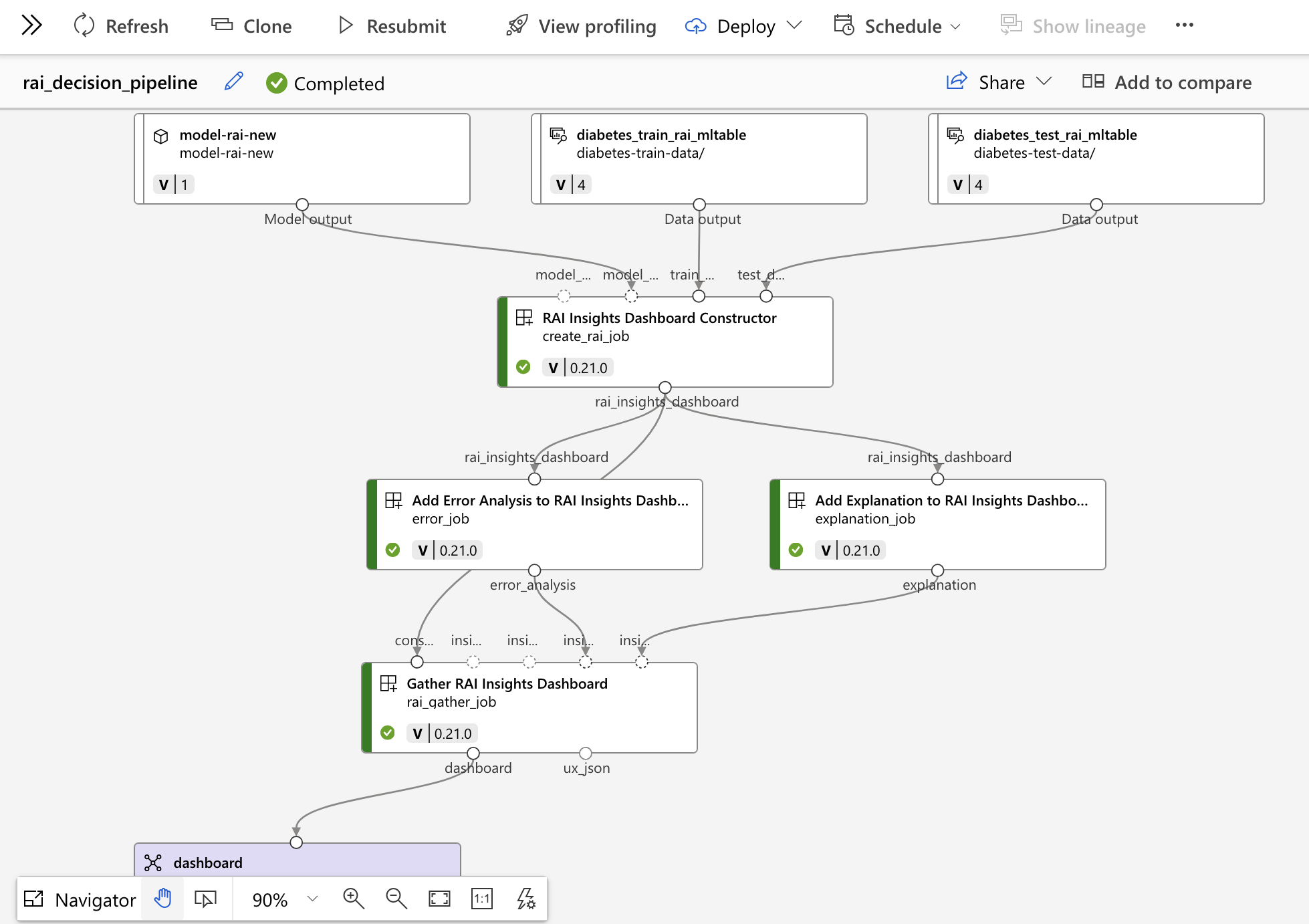

